Russian Scientists Reconstruct Dynamics of Brain Neuron Model Using Neural Network

Researchers from HSE University in Nizhny Novgorod have shown that a neural network can reconstruct the dynamics of a brain neuron model using just a single set of measurements, such as recordings of its electrical activity. The developed neural network was trained to reconstruct the system's full dynamics and predict its behaviour under changing conditions. This method enables the investigation of complex biological processes, even when not all necessary measurements are available. The study has been published in Chaos, Solitons & Fractals.

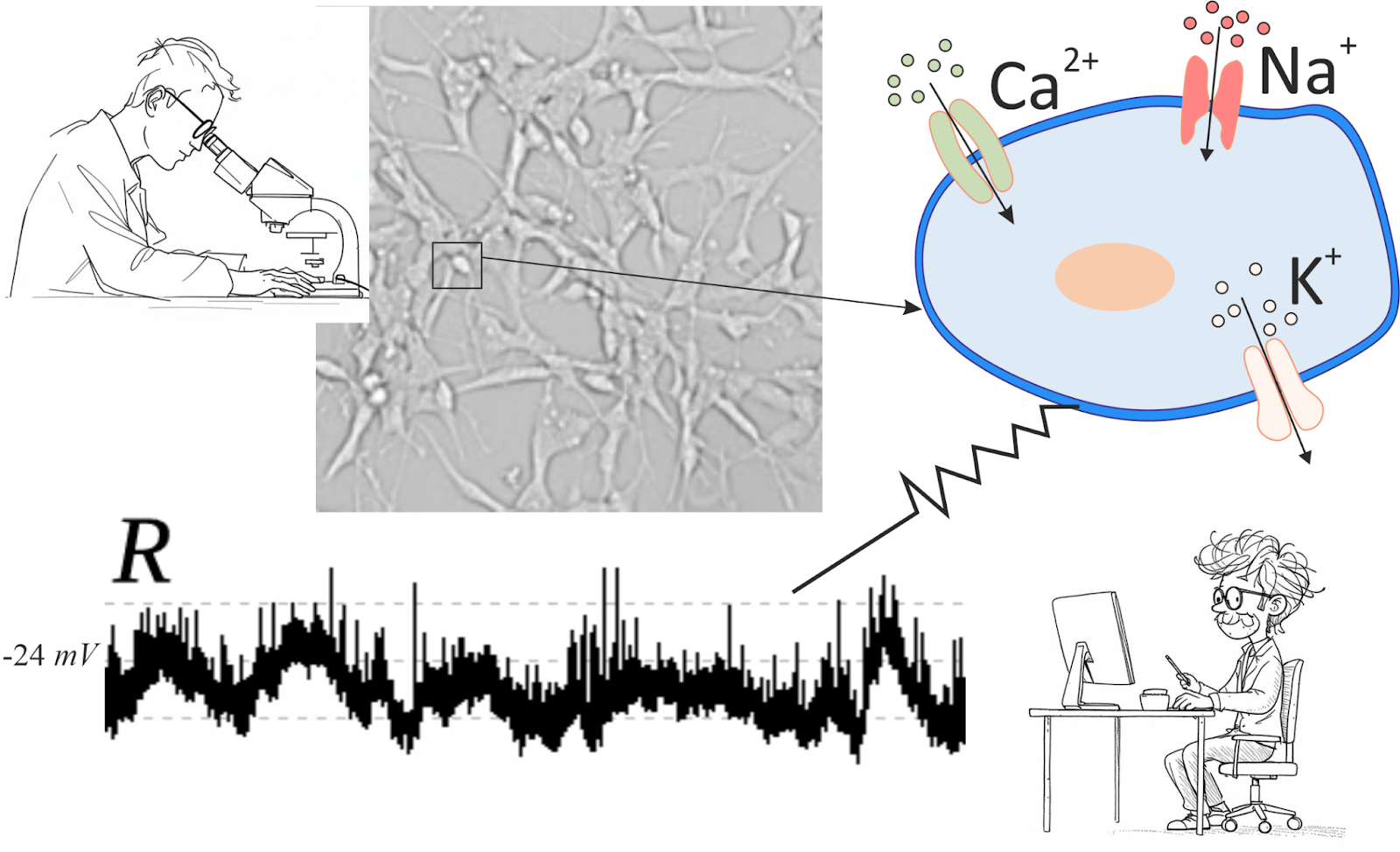

Neurons are cells that enable the brain to process information and transmit signals. They communicate through electrical impulses, which either activate neighbouring neurons or slow them down. Each neuron has a membrane that allows charged particles, known as ions, to pass through channels in the membrane, generating electrical impulses.

Mathematical models are used to study how neurons function. These models are often based on the Hodgkin-Huxley approach, which yields relatively simple models but requires a large number of parameters and calculations. To predict a neuron's behaviour, several parameters and characteristics are typically measured, including membrane voltage, ion currents, and the state of the cell channels. Researchers from HSE University and the Saratov Branch of the Kotelnikov Institute of Radioengineering and Electronics of the Russian Academy of Sciences have shown that it is enough to track changes in a single quantity, the neuron's membrane electrical potential, and to use a neural network to reconstruct the missing data.
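To make the idea of a Hodgkin-Huxley-type model concrete, here is a minimal sketch of the classic 1952 formulation, integrated with a simple forward Euler scheme. The parameter values are the standard textbook ones for the squid giant axon and are an assumption for illustration; the article does not say which specific neuron model was used in the study. The function name `simulate_voltage` and the helper `vtrap` are likewise hypothetical. The model tracks four variables (voltage and three channel gating variables), but the function returns only the voltage, mimicking a situation where a single quantity is recorded.

```python
import math

# Textbook Hodgkin-Huxley constants (assumed for illustration, not from the study)
C_M = 1.0                              # membrane capacitance, uF/cm^2
G_NA, G_K, G_L = 120.0, 36.0, 0.3      # maximal conductances, mS/cm^2
E_NA, E_K, E_L = 50.0, -77.0, -54.387  # reversal potentials, mV


def vtrap(x, y):
    """Evaluate x / (1 - exp(-x / y)), using the limit y when x is near 0."""
    if abs(x) < 1e-7:
        return y
    return x / (1.0 - math.exp(-x / y))


def simulate_voltage(i_ext=10.0, t_max=50.0, dt=0.01):
    """Return the membrane voltage trace (mV) for a constant input current."""
    v, m, h, n = -65.0, 0.05, 0.6, 0.32  # approximate resting state
    trace = []
    for _ in range(int(round(t_max / dt))):
        # Voltage-dependent opening and closing rates of the ion channel gates
        a_m = 0.1 * vtrap(v + 40.0, 10.0)
        b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
        a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
        b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
        a_n = 0.01 * vtrap(v + 55.0, 10.0)
        b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)

        # Ion currents through sodium, potassium, and leak channels
        i_na = G_NA * m ** 3 * h * (v - E_NA)
        i_k = G_K * n ** 4 * (v - E_K)
        i_l = G_L * (v - E_L)

        # Forward Euler update of all four state variables
        v_new = v + dt * (i_ext - i_na - i_k - i_l) / C_M
        m += dt * (a_m * (1.0 - m) - b_m * m)
        h += dt * (a_h * (1.0 - h) - b_h * h)
        n += dt * (a_n * (1.0 - n) - b_n * n)
        v = v_new
        trace.append(v)  # only the voltage is "measured"
    return trace


trace = simulate_voltage()
print(f"samples: {len(trace)}, peak voltage: {max(trace):.1f} mV")
```

Even this basic version needs seven physical constants and six rate functions, which illustrates why reconstructing the hidden gating variables from the voltage alone is a non-trivial task.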

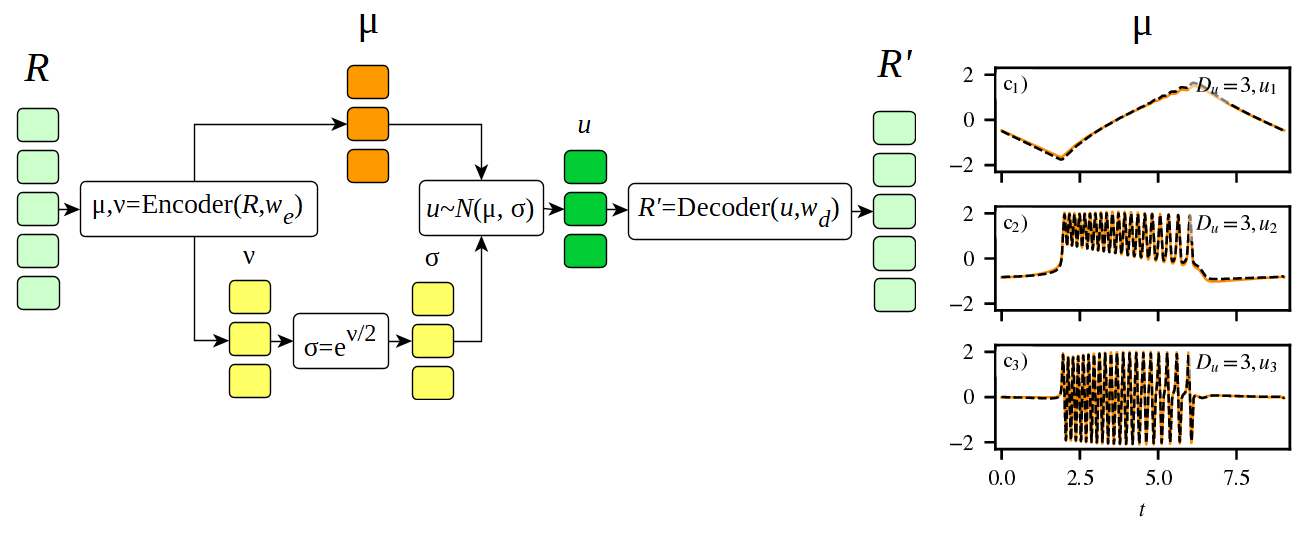

The proposed method consisted of two steps. First, changes in a neuron's potential over time were analysed. This data was then fed into a neural network—a variational autoencoder—that identified key patterns, discarded irrelevant information, and generated a set of characteristics describing the neuron's state. Second, a different type of neural network—neural network mapping—used these characteristics to predict the neuron's future behaviour. The neural network effectively took on the functions of a Hodgkin-Huxley model, but instead of relying on complex equations, it was trained on the data.
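The data flow of the two steps can be sketched as follows. This is a structural illustration only, not the researchers' implementation: the weights are random and untrained, the layer sizes `WINDOW` and `LATENT` are assumed, the sine wave merely stands in for a recorded voltage trace, and all function names are hypothetical. The first function mimics the encoder half of a variational autoencoder (a mean, a log-variance, and a sampled latent vector); the second mimics a map that advances the latent state by one step, given a control parameter.

```python
import math
import random

random.seed(0)

WINDOW = 16  # samples of membrane potential per input window (assumed)
LATENT = 3   # size of the compressed state vector (assumed)


def random_matrix(rows, cols, scale=0.1):
    """Placeholder weights; a real model would learn these from data."""
    return [[random.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


W_MU = random_matrix(LATENT, WINDOW)
W_LOGVAR = random_matrix(LATENT, WINDOW)
W_MAP = random_matrix(LATENT, LATENT + 1)  # extra column for the control parameter


def encode(window):
    """Step 1: compress a window of voltages into a sampled latent state."""
    mu = matvec(W_MU, window)
    log_var = matvec(W_LOGVAR, window)
    # Reparameterisation used in variational autoencoders: z = mu + sigma * noise
    return [m + math.exp(0.5 * lv) * random.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def step(latent, control):
    """Step 2: map the current latent state to the next one."""
    return [math.tanh(v) for v in matvec(W_MAP, latent + [control])]


series = [math.sin(0.3 * k) for k in range(200)]  # stand-in for a voltage recording
state = encode(series[:WINDOW])
trajectory = [state]
for _ in range(10):
    state = step(state, control=0.5)
    trajectory.append(state)

print(len(trajectory), "latent states of size", LATENT)
```

In a trained system, iterating `step` would play the role of integrating the model equations, and changing `control` would correspond to changing a parameter of the neuron.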

'With the advancement of mathematical and computational methods, traditional approaches are being revisited, which not only helps improve them but can also lead to new discoveries. Models reconstructed from data are typically based on low-order polynomial equations, such as the 4th or 5th order. These models have limited nonlinearity, meaning they cannot describe highly complex dependencies without increasing the error,' explains Pavel Kuptsov, Leading Research Fellow at the Faculty of Informatics, Mathematics, and Computer Science of HSE University in Nizhny Novgorod. 'The new method uses neural networks in place of polynomials. Their nonlinearity is governed by sigmoids, smooth functions ranging from 0 to 1, which correspond to polynomial equations (Taylor series) of infinite order. This makes the modelling process more flexible and accurate.'
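The contrast between a low-order polynomial and a sigmoid can be illustrated numerically. The sketch below compares the logistic sigmoid with its own Taylor polynomial truncated at the 5th order around zero, 1/2 + x/4 − x³/48 + x⁵/480. The choice of the logistic function and of the expansion point is an assumption made for illustration; the article does not specify which sigmoid the researchers used.

```python
import math


def sigmoid(x):
    """Logistic sigmoid: smooth and bounded between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def taylor5(x):
    """5th-order Taylor polynomial of the logistic sigmoid around x = 0."""
    return 0.5 + x / 4.0 - x ** 3 / 48.0 + x ** 5 / 480.0


for x in (0.5, 2.0, 6.0):
    error = abs(sigmoid(x) - taylor5(x))
    print(f"x = {x}: sigmoid = {sigmoid(x):.4f}, "
          f"polynomial = {taylor5(x):.4f}, error = {error:.4f}")
```

Near zero the two agree closely, but further out the truncated polynomial grows without bound while the sigmoid saturates, which is one way to picture the limited nonlinearity of low-order polynomial models.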

Typically, a complete set of parameters is required to simulate a complex system, but obtaining this in real-world conditions can be challenging. In experiments, especially in biology and medicine, data is often incomplete or noisy. The scientists demonstrated that a neural network makes it possible to reconstruct missing values and predict the system's behaviour, even with a limited amount of data.

'We take just one row of data, a single example of behaviour, train a model on it, and incorporate a control parameter into it. Imagine it as a rotating switch that can be turned to observe different behaviours. After training, if we start adjusting the switch (that is, changing this parameter), we will observe that the model reproduces various types of behaviours that are characteristic of the original system,' explains Pavel Kuptsov.

During the simulation, the neural network not only replicated the system modes it was trained on but also identified new ones. One of these involves the transition from a series of frequent pulses to single bursts. Such transitions occur when the parameters change, yet the neural network detected them independently, without having seen such examples in the data it was trained on. This means that the neural network does not just memorise examples; it actually recognises hidden patterns.

'It is important that the neural network can identify new patterns in the data,' says Natalya Stankevich, Leading Research Fellow at the Faculty of Informatics, Mathematics, and Computer Science of HSE University in Nizhny Novgorod. 'It identifies connections that are not explicitly represented in the training sample and draws conclusions about the system's behaviour under new conditions.'

The neural network is currently operating on computer-generated data. In the future, the researchers plan to apply it to real experimental data. This opens up opportunities for studying complex dynamic processes where it is impossible to anticipate all potential scenarios in advance.

The study was carried out as part of HSE University's Mirror Laboratories project and supported by a grant from the Russian Science Foundation.
